The second edition of our special session builds on the outstanding reception and scholarly engagement of its first edition. This special session focuses on explainability for AI agents that make, or learn to support, decisions in sequential decision-making tasks in which they aim, for example, to reach a goal or maximize a notion of reward. This includes agents that choose their actions by planning with a world model or by learning from experience (e.g., Reinforcement Learning), as well as agents that prepare plans or policies offline or choose each action online while interacting with their environment.

The focus of this special session contrasts with the extensive body of work on classical interpretable machine learning, which typically emphasizes understanding individual input-output relationships in “black box” models such as deep neural networks. While this line of work is important, intelligent behavior in sequential decision-making problems extends over time or across repeated tasks, and it needs to be explained and understood as such.

The challenge of explaining AI in sequential decision-making tasks, such as robots collaborating with humans or software agents engaged in complex sequential tasks, has only recently gained attention. We may have AI agents that can beat us at chess, but can they teach us how to play in each state of the game? What are the consequences of the actions they suggest? We may have search and rescue robots, but can we work with them effectively and efficiently in the field?

Autonomous agents are becoming an indispensable technology in many domains, such as manufacturing, (semi-)autonomous cars, and socially assistive robotics. To increase the successful adoption and acceptance of AI in these fields, we need to better understand how these agents behave in sequential decision-making problems, how they learn and reason, and what their strengths and weaknesses are when following a given policy or behavior.

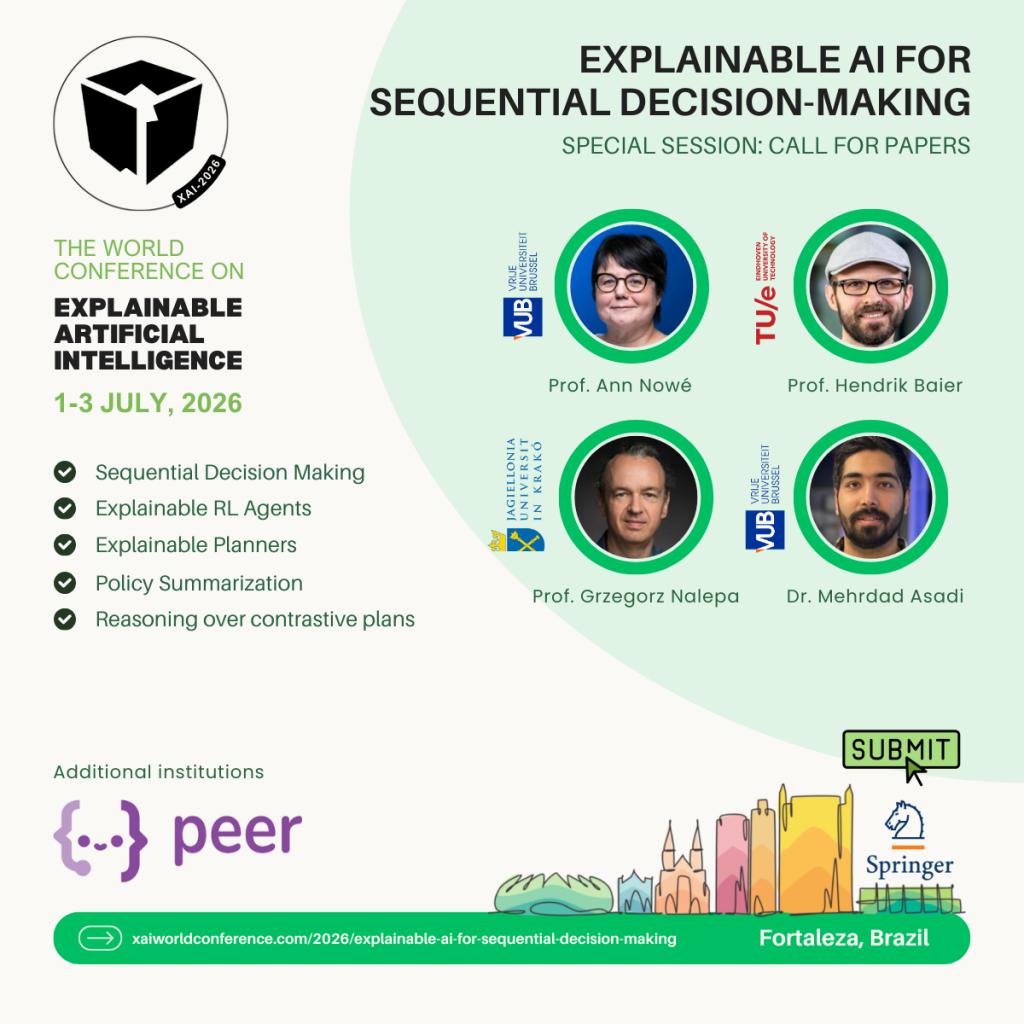

The organizers are members of the PEER project, which focuses on understandable, interactive, and trustworthy sequential decision-making AI systems. In addition to their expertise in the topic and its current challenges, they have extensive experience organizing workshops and conferences.

Keywords: Explainable Sequential Decision Making, Explainable RL Agents, Explainable Planners, Policy Summarization, Learning and reasoning over contrastive plans

Topics

- Explainable planning

- Explainable online search

- Interpretable Reinforcement Learning (RL) methods

- Explainable multi-agent policies/behavior

- Explainable multi-objective RL

- Evaluation methods and metrics for policy-level and trajectory-level interpretability

- Explainability via policy or plan summarization

- Learning and reasoning over contrastive plans or policies

- Advanced frameworks for understanding temporal, multi-step, and cumulative decisions