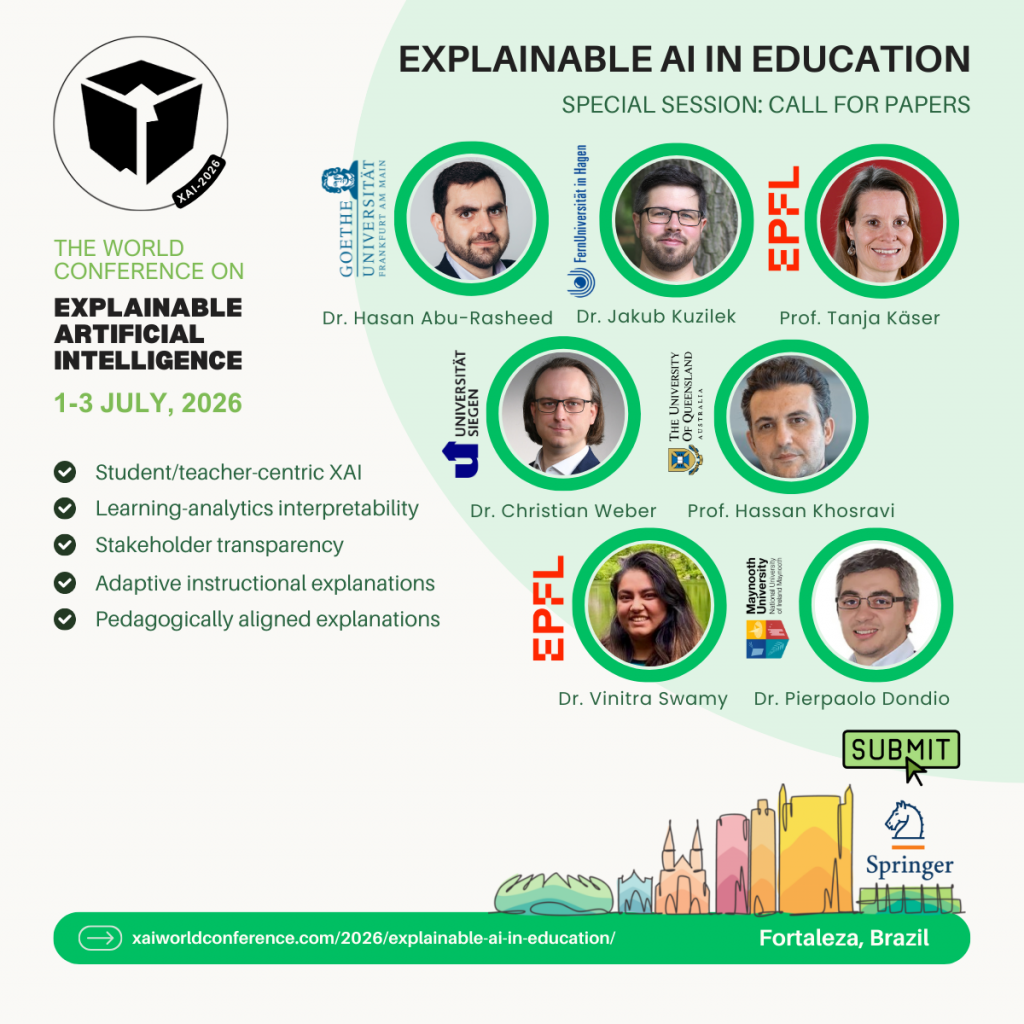

Artificial intelligence (AI) and machine learning (ML), particularly modern deep learning (DL) architectures such as those powering generative AI (Gen-AI), are playing an increasingly important role in educational technologies and are being deployed in a growing range of educational settings. These AI-driven systems support a wide range of educational functions, from adaptive learning and automated assessment to feedback generation and decision support for educational stakeholders. Despite their promise, the opacity of these models and architectures often hinders understanding and accountability, making it difficult for educational stakeholders to evaluate and trust algorithmic outcomes. Without transparency and explainability, educators, students, and parents may struggle to make well-informed decisions based on the predictions and recommendations these algorithms generate. As a result, fostering transparency, interpretability, and stakeholder agency has become crucial for the responsible and equitable application of AI in education.

This special session explores how explainability, interpretable machine learning and deep learning methods, and user-centred design can enhance trust, fairness, and pedagogical alignment across educational settings. It focuses on empowering educational stakeholders, including students, teachers, administrators, and parents, to understand and appropriately act upon AI-generated output. The session brings together researchers and practitioners working at the intersection of machine learning, learning analytics, human–computer interaction, educational psychology, and ethics to explore how interpretable and explainable AI systems can be designed, implemented, and evaluated for educational use.

The session will address questions such as:

- How can pedagogical practices inform the design of AI-based systems that deliver stakeholder-sensitive explanations?

- What metrics, evaluation frameworks, and validation protocols are needed for educational XAI systems built from modern ML architectures?

- How can explainability enhance student autonomy, teacher decision-making, and institutional transparency within AI-supported learning environments?

- How can modern ML architectures, including LLMs and neurosymbolic reasoning systems, produce adaptive, trustworthy, and context-aware explanations of educational predictions and recommendations?

By integrating perspectives from computer science, learning analytics, human–computer interaction, educational psychology, and ethics, the session aims to highlight actionable strategies for developing XAI systems that are technically robust, safe, and educationally meaningful. By linking XAI methods to the ML models and architectures used in education, the session will highlight both existing applications and emerging research directions. Ultimately, the session seeks to promote an interdisciplinary dialogue among XAI and education researchers to strengthen the role of explainability in creating equitable, trustworthy, and human-centred AI in education, and to ensure that explainability becomes a foundation for responsible innovation rather than a technical afterthought.

Keywords: Pedagogically aligned explanations; Student/teacher-centric XAI; Learning-analytics interpretability; Stakeholder transparency; Adaptive instructional explanations; Classroom-based evaluation; Explainable AI (XAI); Education; Interpretable machine learning; Neurosymbolic reasoning; ML architectures; Educational stakeholders; Responsible AI; XAI evaluation; Stakeholder agency

Topics

Interpretable and Explainable Machine Learning for Education

- Using counterfactual explanations to support student goal setting and teacher intervention.

- Post-hoc model explanations for prediction tasks in learning analytics (e.g., SHAP or LIME explaining dropout-risk models).

- Intrinsically interpretable models for student performance prediction (e.g., rule-based models, generalized additive models).

Deep Learning and LLM-Based Educational Systems

- Generating context-aware explanations from LLM-powered tutoring systems.

- Explaining deep learning models used for automated feedback on writing, coding, or problem solving.

- Calibrating and communicating uncertainty in LLMs, e.g., through chain-of-thought explanations, to avoid over-trust and over-reliance.

Pedagogically Grounded XAI Design

- Designing AI explainability aligned with learning theories and pedagogical practices (e.g., scaffolding, cognitive load theory, self-regulated learning).

- Adaptive explanations tailored to learner profiles, prior knowledge, or affective states.

- Explaining ML model recommendations to support formative assessment practices.

Stakeholder-Sensitive XAI

- Explanation interfaces for teachers supporting instructional planning.

- Explanations for institutional stakeholders ensuring accountability in algorithmic decision-making (e.g., resource allocation tools).

- Youth-appropriate explanations for minors interacting with AI-enabled learning tools.

Neurosymbolic and Hybrid AI in Education

- Using neurosymbolic reasoning to generate structured, pedagogically meaningful explanations of AI-supported educational tools.

- Hybrid systems combining rules, ontologies, and deep models to explain concept mastery or misconception patterns.

Human-Centred Evaluation of Educational XAI

- Metrics for XAI usefulness, trust calibration, fairness perception, and actionability.

- Classroom-based validation of explainable ML pipelines.

- Evaluating long-term impact of XAI on learning outcomes and teacher practices.

Ethical, Fair, and Transparent AI in Education

- Detecting and explaining bias in educational ML models (e.g., demographic disparities in predictive risk scores).

- Communicating limitations and risks of model predictions to non-expert stakeholders through XAI.

- Policy implications for explainability in educational AI governance.

Practical Applications & Case Studies

- Explainable recommendation systems in adaptive learning platforms.

- Transparent AI-supported tutoring systems that explain automatically generated feedback.

- XAI dashboards for students and teachers interpreting learning analytics outputs.